Wednesday, February 23. 2011

Critique of McCabe & Snyder: Online not= OA, and OA not= OA journal

Comments on: McCabe, MJ & Snyder, CM (2011) Did Online Access to Journals Change the Economics Literature?

Abstract: Does online access boost citations? The answer has implications for issues ranging from the value of a citation to the sustainability of open-access journals. Using panel data on citations to economics and business journals, we show that the enormous effects found in previous studies were an artifact of their failure to control for article quality, disappearing once we add fixed effects as controls. The absence of an aggregate effect masks heterogeneity across platforms: JSTOR boosts citations around 10%; ScienceDirect has no effect. We examine other sources of heterogeneity including whether JSTOR benefits "long-tail" or "superstar" articles more.

The following quotes are from McCabe, MJ (2011) Online access versus open access. Inside Higher Ed. February 10, 2011.

MCCABE: …I thought it would be appropriate to address the issue that is generating some heat here, namely whether our results can be extrapolated to the OA environment….

1. Selection bias and other empirical modeling errors are likely to have generated overinflated estimates of the benefits of online access (whether free or paid) on journal article citations in most if not all of the recent literature.

If "selection bias" refers to authors' bias toward selectively making their better (hence more citeable) articles OA, then this was controlled for in the comparison of self-selected vs. mandated OA, by Gargouri et al (2010) (uncited in the McCabe & Snyder (2011) [M & S] preprint, but known to the authors -- indeed the first author requested, and received, the entire dataset for further analysis: we are all eager to hear the results).

If "selection bias" refers to the selection of the journals for analysis, I cannot speak for studies that compare OA journals with non-OA journals, since we only compare OA articles with non-OA articles within the same journal. And it is only a few studies, like Evans and Reimer's, that compare citation rates for journals before and after they are made accessible online (or, in some cases, freely accessible online). Our principal interest is in the effects of immediate OA rather than delayed or embargoed OA (although the latter may be of interest to the publishing community).

MCCABE: 2. There are at least 2 “flavors” found in this literature: 1. papers that use cross-section type data or a single observation for each article (see for example, Lawrence (2001), Harnad and Brody (2004), Gargouri, et. al. (2010)) and 2. papers that use panel data or multiple observations over time for each article (e.g. Evans (2008), Evans and Reimer (2009)).

We cannot detect any mention or analysis of the Gargouri et al. paper in the M & S paper…

MCCABE: 3. In our paper we reproduce the results for both of these approaches and then, using panel data and a robust econometric specification (that accounts for selection bias, important secular trends in the data, etc.), we show that these results vanish.

We do not see our results cited or reproduced. Does "reproduced" mean "simulated according to an econometric model"? If so, that is regrettably too far from actual empirical findings to be anything but speculations about what would be found if one were actually to do the empirical studies.

MCCABE: 4. Yes, we “only” test online versus print, and not OA versus online for example, but the empirical flaws in the online versus print and the OA versus online literatures are fundamentally the same: the failure to properly account for selection bias. So, using the same technique in both cases should produce similar results.

Unfortunately this is not very convincing. Flaws there may well be in the methodology of studies comparing citation counts before and after the year in which a journal goes online. But these are not the flaws of studies comparing citation counts of articles that are and are not made OA within the same journal and year.

Nor is the vague attribution of "failure to properly account for selection bias" very convincing, particularly when the most recent study controlling for selection bias (by comparing self-selected OA with mandated OA) has not even been taken into consideration.

Conceptually, the reason the question of whether online access increases citations over offline access is entirely different from the question of whether OA increases citations over non-OA is that (as the authors note), the online/offline effect concerns ease of access: Institutional users have either offline access or online access, and, according to M & S's results, in economics, the increased ease of accessing articles online does not increase citations.

This could be true (although the growth across those same years of the tendency in economics to make prepublication preprints OA [harvested by RePEc] through author self-archiving, much as the physicists had started doing a decade earlier in arXiv, and computer scientists started doing even earlier [later harvested by CiteSeerX], could be producing a huge background effect not taken into account at all in M & S's painstaking temporal analysis [which itself appears as an OA preprint in SSRN!]).

But any way one looks at it, there is an enormous difference between comparing easy vs. hard access (online vs. offline) and comparing access with no access. For when we compare OA vs non-OA, we are taking into account all those potential users that are at institutions that cannot afford subscriptions (whether offline or online) to the journal in which an article appears. The barrier, in other words (though one should hardly have to point this out to economists), is not an ease barrier but a price barrier: For users at nonsubscribing institutions, non-OA articles are not just harder to access: They are impossible to access -- unless a price is paid.

(I certainly hope that M & S will not reply with "let them use interlibrary loan (ILL)"! A study analogous to M & S's online/offline study comparing citations for offline vs. online vs. ILL access in the click-through age would not only strain belief if it too found no difference, but it too would fail to address OA, since OA is about access when one has reached the limits of one's institution's subscription/license/pay-per-view budget. Hence it would again miss all the usage and citations that an article would have gained if it had been accessible to all its potential users and not just to those whose institutions could afford access, by whatever means.)

It is ironic that M & S draw their conclusions about OA in economic terms (predictably, as their interest is in modelling publication economics): in terms of the costs/benefits, for an author, of paying to publish in an OA journal, concluding that since they have shown it will not generate more citations, it is not worth the money.

But the most compelling findings on the OA citation advantage come from OA author self-archiving (of articles published in non-OA journals), not from OA journal publishing. Those are the studies that show the OA citation advantage, and the advantage does not cost the author a penny! (The benefits, moreover, accrue not only to authors and users, but to their institutions too, as the economic analysis of Houghton et al shows.)

And the extra citations resulting from OA are almost certainly coming from users for whom access to the article would otherwise have been financially prohibitive. (Perhaps it's time for more econometric modeling from the user's point of view too…)

I recommend that M & S look at the studies of Michael Kurtz in astrophysics. Those, too, included sophisticated long-term studies of the effect of the wholesale switch from offline to online, and Kurtz found that total citations were in fact slightly reduced, overall, when journals became accessible online! But astrophysics, too, is a field in which OA self-archiving is widespread. Hence whether and when journals go online is moot, insofar as citations are concerned.

(The likely hypothesis for the reduced citations -- compatible also with our own findings in Gargouri et al -- is that OA levels the playing field for users: OA articles are accessible to all their potential users, not just to those whose institutions can afford toll access. As a result, users can self-selectively decide to cite only the best and most relevant articles of all, rather than having to make do with a selection among only the articles to which their institutions can afford toll access. One corollary of this [though probably also a spinoff of the Seglen/Pareto effect] is that the biggest beneficiaries of the OA citation advantage will be the best articles. This is a user-end -- rather than an author-end -- selection effect...)

MCCABE: 5. At least in the case of economics and business titles, it is not even possible to properly test for an independent OA effect by specifically looking at OA journals in these fields since there are almost no titles that switched from print/online to OA (I can think of only one such title in our sample that actually permitted backfiles to be placed in an OA repository). Indeed, almost all of the OA titles in econ/business have always been OA and so no statistically meaningful before and after comparisons can be performed.

The multiple conflation here is so flagrant that it is almost laughable. Online ≠ OA and OA ≠ OA journal.

First, the method of comparing the effect on citations before vs. after the offline/online switch will have to make do with its limitations. (We don't think it's of much use for studying OA effects at all.) The method of comparing the effect on citations of OA vs. non-OA within the same (economics/business, toll-access) journals can certainly proceed apace in those disciplines, the studies have been done, and the results are much the same as in other disciplines.
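The within-journal comparison just described can be sketched as follows. This is only a minimal illustration, assuming each article record carries a journal, a publication year, an OA flag and a citation count; the function name and the toy records are hypothetical and are not taken from any of the studies discussed here.

```python
from collections import defaultdict
from statistics import mean


def oa_advantage_by_stratum(articles):
    """Ratio of mean citations (OA / non-OA) within each (journal, year) cell.

    `articles` is an iterable of (journal, year, is_oa, citations) tuples.
    Cells that lack either OA or non-OA articles, or whose non-OA mean is
    zero, are skipped, since no like-with-like ratio can be formed there.
    """
    cells = defaultdict(lambda: {True: [], False: []})
    for journal, year, is_oa, citations in articles:
        cells[(journal, year)][bool(is_oa)].append(citations)

    ratios = {}
    for key, groups in cells.items():
        if groups[True] and groups[False]:
            non_oa_mean = mean(groups[False])
            if non_oa_mean > 0:
                ratios[key] = mean(groups[True]) / non_oa_mean
    return ratios


# Invented toy records, purely illustrative (not real citation data).
toy_articles = [
    ("Journal A", 2005, True, 12),
    ("Journal A", 2005, True, 8),
    ("Journal A", 2005, False, 5),
    ("Journal A", 2005, False, 5),
    ("Journal B", 2005, False, 3),  # no OA articles in this cell: skipped
]
toy_ratios = oa_advantage_by_stratum(toy_articles)
```

Because every ratio is formed inside a single journal and year, differences between journals and between years cannot masquerade as an OA effect. It does not by itself address author self-selection, which is what the self-selected vs. mandated comparison is for.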

M & S have our latest dataset: Perhaps they would care to test whether the economics/business subset of it is an exception to our finding that (a) there is a significant OA advantage in all disciplines, and (b) it's just as big when the OA is mandated as when it is self-selected.

MCCABE: 6. One alternative, in the case of cross-section type data, is to construct field experiments in which articles are randomly assigned OA status (e.g. Davis (2008) employs this approach and reports no OA benefit).

And another one -- based on an incomparably larger N, across far more fields -- is the Gargouri et al study that M & S fail to mention in their article, in which articles are mandatorily assigned OA status, and for which they have the full dataset in hand, as requested.

MCCABE: 7. Another option is to examine articles before and after they were placed in OA repositories, so that the likely selection bias effects, important secular trends, etc. can be accounted for (or in economics jargon, “differenced out”). Evans and Reimer’s attempt to do this in their 2009 paper but only meet part of the econometric challenge.

M & S are rather too wedded to their before/after method and thinking! The sensible time for authors to self-archive their papers is immediately upon acceptance for publication. That's before the published version has even appeared. Otherwise one is not studying OA but OA embargo effects. (But let me agree on one point: Unlike journal publication dates, OA self-archiving dates are not always known or taken into account; so there may be some drift there, depending on when the author self-archives. The solution is not to study the before/after watershed, but to focus on the articles that are self-archived immediately rather than later.)

Stevan Harnad

Gargouri, Y., Hajjem, C., Lariviere, V., Gingras, Y., Brody, T., Carr, L. and Harnad, S. (2010) Self-Selected or Mandated, Open Access Increases Citation Impact for Higher Quality Research. PLOS ONE 5 (10). e13636

Harnad, S. (2010) The Immediate Practical Implication of the Houghton Report: Provide Green Open Access Now. Prometheus 28 (1): 55-59.

Sunday, July 19. 2009

Science Magazine: Letters About the Evans & Reimer Open Access Study

Update Jan 1, 2010: See Gargouri, Y; C Hajjem, V Larivière, Y Gingras, L Carr, T Brody & S Harnad (2010) “Open Access, Whether Self-Selected or Mandated, Increases Citation Impact, Especially for Higher Quality Research”

Update Feb 8, 2010: See also "Open Access: Self-Selected, Mandated & Random; Answers & Questions"

Five months after the fact, this week's Science Magazine has just published four letters and a response about Evans & Reimer's Open Access and Global Participation in Science, Science 20 February 2009: 1025.

You might want to also take a peek at these three rather more detailed critiques that Science did not publish...:

"Open Access Benefits for the Developed and Developing World: The Harvards and the Have-Nots"

"The Evans & Reimer OA Impact Study: A Welter of Misunderstandings"

"Perils of Press-Release Journalism: NSF, U. Chicago, and Chronicle of Higher Education"

Stevan Harnad

American Scientist Open Access Forum

Wednesday, February 25. 2009

Perils of Press-Release Journalism: NSF, U. Chicago, and Chronicle of Higher Education

Update Jan 1, 2010: See Gargouri, Y; C Hajjem, V Larivière, Y Gingras, L Carr, T Brody & S Harnad (2010) “Open Access, Whether Self-Selected or Mandated, Increases Citation Impact, Especially for Higher Quality Research”

Update Feb 8, 2010: See also "Open Access: Self-Selected, Mandated & Random; Answers & Questions"

In response to my critique of his Chronicle of Higher Education posting on Evans and Reimer's (2009) Science article (which I likewise critiqued, though much more mildly), I got an email from Paul Basken asking me to explain what, if anything, he had got wrong, since his posting was based entirely on a press release from NSF (which turns out to be a relay of a press release from the University of Chicago, E & R's home institution). Sure enough, the silly spin originated from the NSF/Chicago Press release (though the buck stops with E & R's own vague and somewhat tendentious description and interpretation of some of their findings). Here is the NSF/Chicago Press Release, enhanced with my comments, for your delectation and verdict:

NSF/U.CHICAGO:

"If you offer something of value to people for free while someone else charges a hefty sum of money for the same type of product, one would logically assume that most people would choose the free option. According to new research in today's edition of the journal Science, if the product in question is access to scholarly papers and research, that logic might just be wrong. These findings provide new insight into the nature of scholarly discourse and the future of the open source publication movement [sic, emphasis added]."

(1) If you offer something valuable for free, people will choose the free option unless they've already paid for the paid option (especially if they needed -- and could afford -- it earlier).

(2) Free access after an embargo of a year or more is not the same "something" as immediate free access. Its "value" for a potential user is lower. (That's one of the reasons institutions keep paying for subscription/license access to journals.)

(3) Hence it is not in the least surprising that immediate (paid) print-on-paper access + online access (IP + IO) generates more citations than immediate (paid) print-on-paper access (IP) alone.

(4) Nor is it surprising that immediate (paid) print-on-paper access + online access + delayed free online access (IP + IO + DF) generates more citations than just immediate (paid) print-on-paper + online access (IP + IO) alone -- even if the free access is provided a year or longer after the paid access.

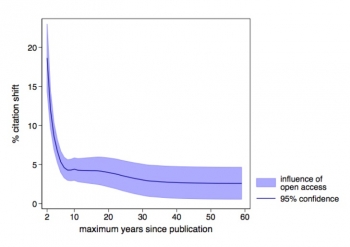

(5) Why on earth would anyone conclude that the fact that the increase in citations from IP to IP + IO is 12% and the increase in citations from IP + IO to IP + IO + DF is a further 8% implies anything whatsoever about people's preference for paid access over free access? Especially when the free access is not even immediate (IF) but delayed (DF) and the 8% is an underestimate based on averaging in ancient articles: see E & R's supplemental Figure S1(c), right [with thanks to Mike Eisen for spotting this one!].
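The arithmetic behind point (5) can be made explicit with a small sketch. It assumes that the "further 8%" is measured relative to the IP + IO level (so the two gains compound); if it were instead measured against the IP baseline, the final figure would be 120 rather than about 121. The function name and the baseline of 100 are illustrative only.

```python
def cumulative_index(baseline, online_gain=0.12, free_gain=0.08):
    """Citation index at each access stage.

    Assumption: each gain compounds on the previous stage
    (IP -> IP + IO -> IP + IO + DF).
    """
    ip = baseline
    ip_io = ip * (1 + online_gain)        # paid print + paid online
    ip_io_df = ip_io * (1 + free_gain)    # plus delayed free online access
    return ip, ip_io, ip_io_df


ip, ip_io, ip_io_df = cumulative_index(100.0)
```

Under either reading the free-access stage sits on top of the paid-online stage: the 8% is an increment over and above the 12%, not a rival figure to be compared with it.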

NSF/U.CHICAGO:

"Most research is published in scientific journals and reviews, and subscriptions to these outlets have traditionally cost money--in some cases a great deal of money. Publishers must cover the costs of producing peer-reviewed publications and in most cases also try to turn a profit. To access these publications, other scholars and researchers must either be able to afford subscriptions or work at institutions that can provide access.

"In recent years, as the Internet has helped lower the cost of publishing, more and more scientists have begun publishing their research in open source [sic] outlets online. Since these publications are free to anyone with an Internet connection, the belief has been that more interested readers will find them and potentially cite them. Earlier studies had postulated that being in an open source [sic] format could more than double the number of times a journal article is used by other researchers."

What on earth is an "open source outlet"? ("Open source" is a software matter.) Let's assume what's meant is "open access"; but then is this referring to (i) publishing in an open access journal, to (ii) publishing in a subscription journal but also self-archiving the published article to make it open access, or to (iii) self-archiving an unpublished paper?

What (many) previous studies had measured (not "postulated") was that authors (ii) publishing in a subscription journal (IP + IO) and also self-archiving their published article to make it Open Access (IP + IO + OA) could more than double their citations, compared to IP + IO alone.

NSF/U.CHICAGO:

"To test this theory, James A. Evans, an assistant professor of sociology at the University of Chicago, and Jacob Reimer, a student of neurobiology also at the University of Chicago, analyzed millions of articles available online, including those from open source publications [sic] and those that required payment to access."

No, Evans & Reimer (E & R) did nothing of the sort; and no "theory" was tested (nor was there any theory).

E & R only analyzed articles from subscription access journals before and after the journals made them accessible online (to paid subscribers only) (i.e., IP vs IP + IO) as well as before and after the journals made the online version accessible free for all (after a paid-access-only embargo of up to a year or more: i.e., IP + IO vs IP + IO + DF). E & R's methodology was based on comparing citation counts for articles within the same journals before and after being made free online (by the journal) following delays of various lengths.

NSF/U.CHICAGO:

"The results were surprising. On average, when a given publication was made available online after being in print for a year, being published in an open source format [sic] increased the use of that article by about 8 percent. When articles are made available online in a commercial format a year after publication, however, usage increases by about 12 percent."

In other words, the citation count increase from just (paid) IP to (paid) IP + IO was 12% and the citation count increase from just (paid) IP + IO to (paid) IP + IO + DF was a further 8%. Not in the least surprising: Making paid-access articles accessible online increases their citations, and making them free online (even if only after a delay of a year or longer) increases their citations still more.

What is surprising is the rather absurd spin that this press release appears to be trying to put on this decidedly unsurprising finding.

NSF/U.CHICAGO:

"'Across the scientific community,' Evans said in an interview, 'it turns out that open access does have a positive impact on the attention that's given to the journal articles, but it's a small impact.'"

We already knew that OA increased citations, as the many prior published studies have shown. Most of those studies, however, were based on immediate OA (i.e., IF), not embargoed OA. What E & R do show, interestingly, is that even delaying OA for a year or more still increases citations, though (unsurprisingly) not as much as immediate OA (IF) does.

NSF/U.CHICAGO:

"Yet Evans and Reimer's research also points to one very positive impact of the open source movement [sic] that is sometimes overlooked in the debate about scholarly publications. Researchers in the developing world, where research funding and libraries are not as robust as they are in wealthier countries, were far more likely to read and cite open source articles."

A large portion of the citation increase from (delayed) OA turns out to come from Developing Countries (refuting Frandsen's recent report to the contrary). This is a new and useful finding (though hardly a surprising one, if one does the arithmetic). (A similar analysis, within the US, comparing citations from America's own "Have-Not" Universities (with the smaller journal subscription budgets) with its Harvards might well reveal the same effect closer to home, though probably at a smaller scale.)

NSF/U.CHICAGO:

"The University of Chicago team concludes that outside the developed world, the open source movement [sic] 'widens the global circle of those who can participate in science and benefit from it.'"

And it will be interesting to test for the same effect comparing the Harvards and the Have-Nots in the US -- but a more realistic estimate might come from looking at immediate OA (IF) rather than just embargoed OA (DF).

NSF/U.CHICAGO:

"So while some scientists and scholars may chose to pay for scientific publications even when free publications are available, their colleagues in other parts of the world may find that going with open source works [sic] is the only choice they have."

It would be interesting to hear the authors of this NSF/Chicago press release -- or E & R, for that matter -- explain how this paradoxical "preference" for paid access over free access was tested during the access embargo period...

Stevan Harnad

American Scientist Open Access Forum

Tuesday, February 24. 2009

The Evans & Reimer OA Impact Study: A Welter of Misunderstandings

Update Jan 1, 2010: See Gargouri, Y; C Hajjem, V Larivière, Y Gingras, L Carr, T Brody & S Harnad (2010) “Open Access, Whether Self-Selected or Mandated, Increases Citation Impact, Especially for Higher Quality Research”

Update Feb 8, 2010: See also "Open Access: Self-Selected, Mandated & Random; Answers & Questions"

Basken, Paul (2009) Fee-Based Journals Get Better Results, Study in Fee-Based Journal Reports. Chronicle of Higher Education February 23, 2009

(Re: Paul Basken) No, the Evans & Reimer (E & R) study in Science does not show that

"researchers may find a wider audience if they make their findings available through a fee-based Web site rather than make their work freely available on the Internet."

This is complete nonsense, since the "fee-based Web site" is immediately and fully accessible -- to all those who can and do pay for access in any case. (It is simply the online version of the journal; for immediate permanent access to it, an individual or institution pays a subscription or license fee.) The free version is extra: a supplement to that fee-based online version, not an alternative to it: it is provided for those would-be users who cannot afford the access-fee. In E & R's study, the free access is provided -- after an access-embargo of up to a year or more -- by the journal itself. In studies by others, the free access is provided by the author, depositing the final refereed draft of the article on his own website, free for all (usually immediately, with no prior embargo). E & R did not examine the latter form of free online access at all. (Paul Basken has confused (1) the size of the benefits of fee-based online access over fee-based print-access alone with (2) the size of the benefits of free online access over fee-based online-access alone. The fault is partly E & R's for describing their findings in such an equivocal way.)

(Re: Phil Davis) No, E & R do not show that

"the effect of OA on citations may be much smaller than originally reported."

E & R show that the effect of free access on citations after an access-embargo (fee-based access only) of up to a year or longer is much smaller than the effect of the more immediate OA that has been widely reported.

(Re: Phil Davis) No, E & R do not show that

"the vast majority of freely-accessible scientific articles are not published in OA journals, but are made freely available by non-profit scientific societies using a subscription model."

E & R did not even look at the vast majority of current freely-accessible articles (per year), which are the ones self-archived by their authors. E & R looked only at journals that make their entire contents free after an access-embargo of up to a year or more. (Cumulative back-files will of course outnumber any current year, but what current research needs, especially in fast-moving fields, is immediate access to current, ongoing research, not just legacy research.)

See: "Open Access Benefits for the Developed and Developing World: The Harvards and the Have-Nots"

Stevan Harnad

American Scientist Open Access Forum

Thursday, February 19. 2009

Open Access Benefits for the Developed and Developing World: The Harvards and the Have-Nots

Update Jan 1, 2010: See Gargouri, Y; C Hajjem, V Larivière, Y Gingras, L Carr, T Brody & S Harnad (2010) “Open Access, Whether Self-Selected or Mandated, Increases Citation Impact, Especially for Higher Quality Research”

Update Feb 8, 2010: See also "Open Access: Self-Selected, Mandated & Random; Answers & Questions"

The portion of Evans & Reimer's (2009) study (E & R) that is valid is timely and useful, showing that a large portion of the Open Access citation impact advantage comes from providing the developing world with access to the research produced by the developed world. Using a much bigger database, E & R refute (without citing!) a recent flawed study (Frandsen 2009) that reported that there was no such effect (as well as a premature response hailing it as "Open Access: No Benefit for Poor Scientists").

SUMMARY: Evans & Reimer (2009) (E & R) show that a large portion of the increased citations generated by making articles freely accessible online ("Open Access," OA) comes from Developing-World authors citing OA articles more. It is very likely that a within-US comparison based on the same data would show much the same effect: making articles OA should increase citations from authors at the Have-Not universities (with the smaller journal subscription budgets) more than from Harvard authors. Articles by Developing World (and US Have-Not) authors should also be cited more if they are made OA, but the main beneficiaries of OA will be the best articles, wherever they are published. This raises the question of how many citations – and how much corresponding research uptake, usage, progress and impact – are lost when articles are embargoed for 6-12 months or longer by their publishers against being made OA by their authors.

(It is important to note that E & R's results are not based on immediate OA but on free access after an embargo of up to a year or more. Theirs is not an estimate of the increase in citation impact that results from immediate Open Access; it is just the increase that results from ending Embargoed Access. In a fast-moving field of science, an access lag of a year can lose a lot of research impact, permanently.)

E & R found the following. (Their main finding is number #4):

#1 When articles are made commercially available online their citation impact becomes greater than when they were commercially available only as print-on-paper. (This is unsurprising, since online access means easier and broader access than just print-on-paper access.)

#2 When articles are made freely available online their citation impact becomes greater than when they were not freely available online. (This confirms the widely reported "Open Access" (OA) Advantage.)

(E & R cite only a few other studies that have previously reported the OA advantage, stating that those were only in a few fields, or within just one journal. This is not correct; there have been many other studies that likewise reported the OA advantage, across nearly as many journals and fields as E & R sampled. E & R also seem to have misunderstood the role of prepublication preprints in those fields (mostly physics) that effectively already have post-publication OA. In those fields, all of the OA advantage comes from the year(s) before publication -- "the Early OA Advantage", which is relevant to the question, raised below, about the harmful effects of access embargoes. And last, E & R cite the few negative studies that have been published -- mostly the deeply flawed studies of Phil Davis -- that found no OA Advantage or even a negative effect (as if making papers freely available reduced their citations!).)

#3 The citation advantage of commercial online access over commercial print-only access is greater than the citation advantage of free access over commercial print plus online access only. (This too is unsurprising, but it is also somewhat misleading, because virtually all journals have commercial online access today: hence the added advantage of free online access is something that occurs over and above mere online (commercial) access -- not as some sort of competitor or alternative to it! The comparison today is toll-based online access vs. free online access.)

(There may be some confusion here between the size of the OA advantage for journals whose contents were made free online after a postpublication embargo period, versus those whose contents were made free online immediately upon publication -- i.e., the OA journals. Commercial online access is of course never embargoed: you get access as soon as it's paid for! Previous studies have made within-journal comparisons, field by field, between OA and non-OA articles within the same journal and year. These studies found much bigger OA Advantages because they were comparing like with like and because they were based on a longer time-span: The OA advantage is still small after only a year, because it takes time for citations to build up; this is even truer if the article becomes "OA" only after it has been embargoed for a year or longer!)

#4 The OA Advantage is far bigger in the Developing World (i.e., Developing-World first-authors, when they cite OA compared to non-OA articles). This is the main finding of this article, and this is what refutes the Frandsen study.

What E & R have not yet done (and should!) is to check for the very same effect, but within the Developed World, by comparing the "Harvards vs. the Have-Nots" within, say, the US: The ARL has a database showing the size of the journal holdings of most research university libraries in the US. Analogous to their comparison between Developed and Developing countries, E & R could split the ARL holdings into 10 deciles, as they did with the wealth (GNI) of countries. I am almost certain this will show that a large portion of the OA impact advantage in the US comes from the US's "Have-Nots", compared to its Harvards.
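The decile split proposed above can be sketched roughly as follows. This is only an illustration under stated assumptions, not E & R's method: the record fields (`holdings`, `cites_to_oa`, `cites_to_non_oa`), both function names and all the numbers are hypothetical placeholders, and the "advantage" is taken here simply as the ratio of mean citations to OA articles over mean citations to non-OA articles.

```python
from statistics import mean

def split_into_deciles(records, key):
    """Sort records by `key` and split them into 10 near-equal groups, lowest first."""
    ordered = sorted(records, key=key)
    n = len(ordered)
    return [ordered[i * n // 10:(i + 1) * n // 10] for i in range(10)]

def oa_advantage(records):
    """Ratio of mean citations to OA articles over mean citations to non-OA articles."""
    oa = mean(r["cites_to_oa"] for r in records)
    non_oa = mean(r["cites_to_non_oa"] for r in records)
    return oa / non_oa

# Hypothetical placeholder data: one record per institution.
# The values are arbitrary and carry no empirical meaning.
institutions = [
    {"holdings": 1000 * (i + 1), "cites_to_oa": 20, "cites_to_non_oa": 10 + i}
    for i in range(20)
]

deciles = split_into_deciles(institutions, key=lambda r: r["holdings"])
advantage_by_decile = [oa_advantage(group) for group in deciles]
```

With real data, the question would be whether `advantage_by_decile` is larger for the low-holdings deciles (the "Have-Nots") than for the high-holdings ones (the "Harvards").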

The other question is the converse: The OA advantage for articles authored (rather than cited) by Developing World authors. OA does not just give the Developing World more access to the input it needs (mostly from the Developed World), as E & R showed; but OA also provides more impact for the Developing World's research output, by making it more widely accessible (to both the Developing and Developed world) -- something E & R have not yet looked at either, though they have the data! Because of what Seglen (1992) called the "skewness of science," however, the biggest beneficiaries of OA will of course be the best articles, wherever their authors: 90% of citations go to the top 10% of articles.
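To make the skewness point concrete, here is a minimal sketch of how the share of citations going to the top 10% of articles could be computed. The function name `top_share` and the citation counts are hypothetical; the counts were constructed purely to illustrate the calculation and are not data from any study.

```python
def top_share(citation_counts, top_fraction=0.10):
    """Fraction of all citations received by the most-cited `top_fraction` of articles."""
    ordered = sorted(citation_counts, reverse=True)
    total = sum(ordered)
    if total == 0:
        return 0.0  # no citations at all, so no share to report
    # Number of articles in the top group (at least one article).
    k = max(1, round(len(ordered) * top_fraction))
    return sum(ordered[:k]) / total

# Hypothetical citation counts for ten articles (made up for illustration).
counts = [90, 2, 2, 1, 1, 1, 1, 1, 1, 0]
share = top_share(counts)
```

Applied separately to OA and non-OA articles, the same function would show how strongly citations are concentrated among the most-cited articles in each group.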

Last, there is the crucial question of the effect of access embargoes. It is essential to note that E & R's results are not based on immediate OA but on free access after an embargo of up to a year or more. Theirs is hence not an estimate of the increase in citation impact that results from immediate Open Access; it is just the increase that results from ending Embargoed Access.

It will be important to compare the effect of OA on embargoed versus unembargoed content, and to look at the size of the OA Advantage after an interval of longer than just a year. (Although early access is crucial in some fields, citations are not instantaneous: it may take a few years' work to generate the cumulative citation impact of that early access. But it is also true in some fast-moving fields that the extra momentum lost during a 6-12-month embargo is never really recouped.)

Evans, JA & Reimer, J (2009) Open Access and Global Participation in Science. Science 323(5917) (February 20 2009)

Hajjem, C, Harnad, S & Gingras, Y (2005) Ten-Year Cross-Disciplinary Comparison of the Growth of Open Access and How it Increases Research Citation Impact. IEEE Data Engineering Bulletin 28(4): 39-47

Seglen, PO (1992) The skewness of science. Journal of the American Society for Information Science 43: 628-638

Stevan Harnad

American Scientist Open Access Forum


